Creating a 3D model is a chance to take something from the world, the stuff of real life, and translate it into data a computer can work with. As Raphael has already posted, he, Tori, and I are part of a collaborative Digital Humanities and Art History project to model architectural sites from medieval Paris, which I’ll talk about a bit here.

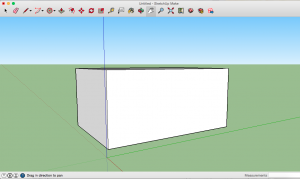

Snyder’s essay, “Virtual Reality for Humanities Scholarship,” lays down the basics for anyone who wants to tackle a DH project in 3D modeling and virtual reality. Her case studies of different virtual reality projects capture what it is like to work on one and highlight some of the problems that arise. Once the idea for a three-dimensional project has been proposed, every decision after that point affects the final outcome. Even the choice of software plays a huge role in the look and feel of the final product. (Not all modeling software is created equal: most programs offer very similar core functions, but each has its own specialized tools and carries out tasks in its own way.) The Paris modeling project uses Vectorworks, a modeling program designed with architects in mind. Vectorworks and Google’s SketchUp, a program more people may be familiar with, both offer 3D modeling, but Vectorworks seems much more sophisticated in its layout and the variety of functions it offers. That impression may come partly from the fact that I’m a novice in the 3D modeling world, but Vectorworks does have a large number of specialized features for its users.

With SketchUp I was able to make a simple rectangular prism intuitively, without already knowing much about the software. I used the pencil tool to draw a rectangle and then selected the tool that looks like a box with an arrow pointing up (the Push/Pull tool) to make it three-dimensional. There aren’t many fancy buttons here, but it gets the job done.

Vectorworks, however, is a different story. Basic functions like drawing shapes are found in the Basic tool palette on the left, where I selected the rectangle tool to draw a 2D shape. I then had to extrude that shape to convert it into a 3D one. Now I know I can use the shortcut Command+E to extrude, but a few months ago I spent a good amount of time hunting through the menus for the function that would make my shapes 3D. Looking at all the options in Vectorworks, especially compared to those in SketchUp, can feel a little overwhelming, since it offers so many more specialized modeling features.
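To make the idea of extrusion a little more concrete, here is a minimal sketch of what “extruding” a flat rectangle into a prism amounts to geometrically: copy the 2D outline at a new height, then connect each edge of the bottom outline to the matching edge on top with a side wall. This is only an illustration under simple assumptions (a straight vertical extrusion of a polygon listed counterclockwise); the `extrude` function is hypothetical and is not how Vectorworks or SketchUp actually implement their tools.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]


def extrude(profile: List[Point2D], height: float) -> Tuple[List[Point3D], List[List[int]]]:
    """Hypothetical helper: extrude a closed 2D polygon straight up along z.

    Assumes `profile` lists the polygon's corners counterclockwise.
    Returns the prism's vertices and its faces (each face is a list of vertex indices).
    """
    n = len(profile)
    bottom = [(x, y, 0.0) for x, y in profile]     # the original 2D shape at z = 0
    top = [(x, y, height) for x, y in profile]     # a copy lifted to z = height
    vertices = bottom + top

    faces = [
        list(range(n - 1, -1, -1)),  # bottom face, reversed so it faces downward
        list(range(n, 2 * n)),       # top face, facing upward
    ]
    # One four-sided wall per edge of the outline, joining bottom edge i->j to its copy on top
    for i in range(n):
        j = (i + 1) % n
        faces.append([i, j, n + j, n + i])
    return vertices, faces


rect = [(0.0, 0.0), (4.0, 0.0), (4.0, 2.0), (0.0, 2.0)]
verts, faces = extrude(rect, 3.0)
print(len(verts), len(faces))  # 8 6
```

A rectangle has four corners and four edges, so the result is the familiar box: eight vertices and six faces (top, bottom, and four sides), the same prism the GUI tools produce with a click or a shortcut.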

After the software for a virtual reality project is chosen, the researchers and modelers can begin translating the information they have into the beginnings of a 3D model. Much like Snyder’s example of creating a virtual Florence from historical sources, the professors and researchers behind the Paris project have compiled sources ranging from primary documents, such as letters, building plans, and engravings, to recent scholarly research and photographs of surviving elements. Snyder hints at the complexities that even this first stage presents, but it is not until you actually have to sift through repositories of text and images to begin creating the foundation of a building that the full scope of the project becomes clear.

I could go on for days about the importance of the small details behind this DH project, and I’m sure Tori and Raphael could too, but there is far more to it than I have space to discuss here. So I’ll end with this big-picture statement about the Paris project: one of its goals is to create models that let us test the theories of Gothic historians, to see whether they stand up to the test of recreation and, conversely, whether our recreations stand up to the test of scholarly research.