In Diane Favro’s “Meaning in Motion: A Personal Walk Through Historical Simulation Modeling at UCLA,” she examines the advantages and disadvantages of three-dimensional modeling as a means of historical analysis. Having taken Professor Favro’s class and worked first-hand with her 3D model, I have encountered some of these advantages and disadvantages myself. Favro begins by citing Cicero: “wherever we walk, we set foot in some history.” She then continues in her own words, writing of the ancient Romans: “A walk through a city was equivalent to, or even preferred to, reading a text. Buildings, statues, inscriptions, and urban occupants all operated as signifiers that elicited potent associations…the Romans imbued each place with a spirit or genius whose identity was shaped by past and future actions.”

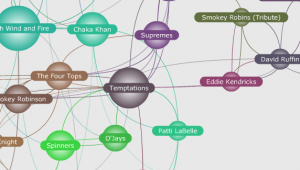

Favro discusses the development of HyperCities, a project created in collaboration with USC, CUNY, and community partners. The software evolved from “a Flash-based mapping project into a robust participatory, multimodal platform that brings together the analytic tools of GIS, the geo-markup language KML, and traditional methods of humanistic inquiry utilizing Google’s Map and Earth Application Programming Interface (API) released in 2005-06.”

With improved modeling came further challenges. For one, because the viewer sits and navigates by scrolling a mouse, the model relies on “ocularcentrism”: sight is the only sense engaged, while sound, smell, and the other senses are ignored. Simulating space, speed, processing, and temporal factors is also very difficult. Providing sufficient cultural context raised many questions, such as: “Did the Romans in the Forum privilege movement or sight over smelling, or were all aspects experienced in a fog of urban distinction?” Ultimately, this advance in representation raises an issue pertinent to the digital humanities field as a whole: “If a picture is worth a thousand words, and interactive 3D interaction is worth tens of thousands. Yet there is not agreement about scholarly assessment.” In other words, we do not yet have stable criteria for analyzing these moving, interactive experiences.

Although it is not an actual historical 3D reconstruction, a story in Italo Calvino’s Invisible Cities struck me as an abstract example of what Favro describes. Calvino’s novel is divided into short descriptions, or stories, of seemingly impossible cities. The city that reminded me of Favro’s research outlook is Zobeide:

> Men of various nations had an identical dream. They saw a woman running at night through an unknown city; she was seen from behind with long hair, and she was naked. They dreamed of pursuing her. As they twisted and turned, each of them lost her. After the dream they set out in search of that city; they never found it, but they found one another; they decided to build a city like the one in the dream. In laying out the streets, each followed the course of his pursuit; at the spot where they had lost the fugitive’s trail, they arranged the spaces and walls differently from the dream, so she would be unable to escape again.

The story of Zobeide offers a conceptual example of experience shaping physical space. Space is informed by experience; how do we realize this in three-dimensional space? I read Invisible Cities for a studio class in which we were asked to draw maps of the cities we chose. I didn’t choose Zobeide, but the resulting maps were strong attempts to represent conceptual cities in two dimensions. I would love to see how someone might map this city in three-dimensional space…